2 Preliminaries: Communicating Systems and Games

The core background of this thesis is the trinity of characterizations for behavioral equivalences: relational, modal, and game-theoretic.

This chapter takes a tour into the field of formalisms used to model programs and communicating systems, which are accompanied by many notions for behavioral equivalence and refinement to relate or distinguish their models. As core formalisms we use transition systems and the Calculus of Communicating Systems (CCS) in Section 2.1.

The tour is agnostic, building on the basic formalism of labeled transition systems and standard equivalences such as trace equivalence and bisimilarity. Simultaneously, the approach is opinionated, focusing on Milner’s tradition of concurrency theory with a strong pull towards game characterizations.

Figure 2.1 shows the scaffold of this section along the trinity, instantiated to the classic notion of bisimilarity:

- Section 2.2 introduces (among other notions) bisimilarity through its relational characterization in terms of symmetric simulation relations.

- Section 2.3 treats the dual connection of bisimilarity and the distinguishing powers of a modal logic, known as the Hennessy–Milner theorem.

- Section 2.4 shows that both, the relations to validate bisimilarity and the modal formulas to falsify it, appear as certificates of strategies in a reachability game where an attacker tries to tell states apart and a defender tries to prevent this.

A reader familiar with the contents of Figure 2.1 might mostly skim through this chapter of preliminaries (although they are quite exciting!). This chapter aims to seed two core insights for the upcoming chapters:

Equivalence games are a versatile way to handle behavioral equivalences and obtain decision procedures. The Hennessy–Milner theorem appears as a shadow of the determinacy of the bisimulation reachability game.

We will move towards motivating the games through logics.

Modal characterizations allow a uniform handling of the hierarchy of equivalences. Productions in grammars of potential distinguishing formulas translate to game rules for equivalence games.

Both points might seem non-standard to those more accustomed to relational or denotational definitions of behavioral equivalences. To provide them with a bridge, our presentation starts with relational and denotational definitions—but once we have crossed this bridge, we will stay in the realm of games and logics, putting these first.

2.1 Behavior of Programs

Every computer scientist has some model in their head of what it is that their algorithms and programs are doing. Usually these models are wrong,1 especially, once concurrency enters the picture. The area of formal methods tries to make models sufficiently precise that one, at least, can say what went wrong.

1 But some are useful, as the saying goes.

2.1.1 Labeled Transition Systems

Labeled transition systems are the standard formalism to discretely express the state space of programs, algorithms, and more. One can think of them as nondeterministic finite automata without finiteness constraint.

Definition 2.1 (Transition systems) A transition system \mathcal{S}=(\mathcal{P},\mathit{Act},\xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}) consists of

- \mathcal{P}, a set of states,

- \mathit{Act}, a set of actions, and

- {\xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}} ⊆ \mathcal{P}× \mathit{Act}× \mathcal{P}, a transition relation.

We use infix notation for arrows, that is, p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\alpha}$}}} p' stands for (p, \alpha, p') \in {\xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}}. We write \operatorname{Der}(p, α) for the set of derivative states \{p' \mid p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p'\} and \operatorname{Ini}(p) for the set of enabled actions \{α \mid \exists p' \ldotp p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p'\}. We sometimes lift transitions to sets P, P' \subseteq \mathcal{P} with P \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\alpha}$}}} P' iff P' = \{p' \in \mathcal{P}\mid \exists p \in P \ldotp p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\alpha}$}}} p'\}.

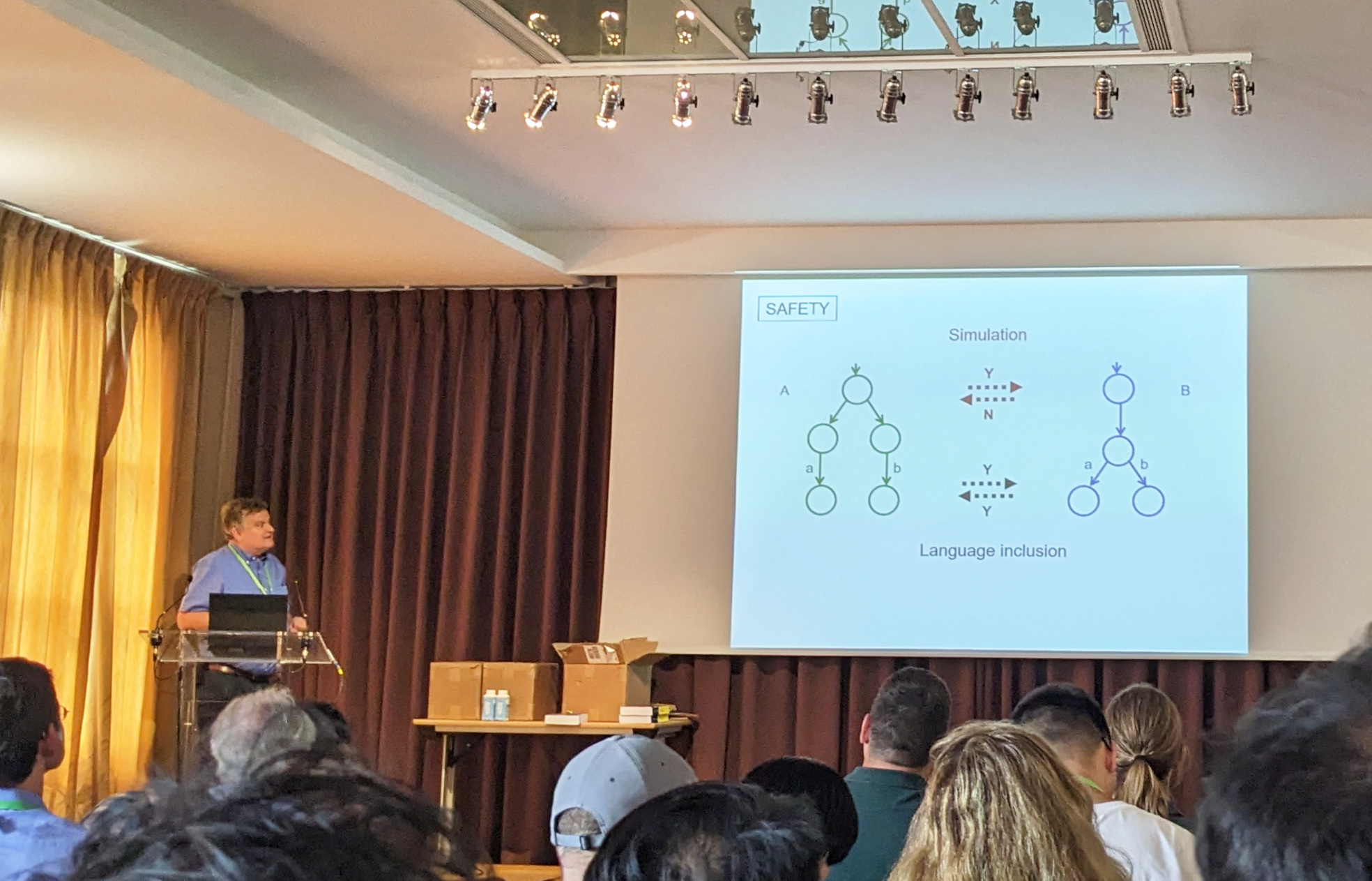

There is a canonical example used to discuss equivalences within transition systems, which we want to draw from. We will take the formulation that Henzinger used at CAV’23 as seen in Figure 2.2.

Example 2.1 (A classic example) Let us consider the labeled transition system \mathcal{S}_\mathsf{PQ} = (\{\mathsf{P}, \mathsf{p_a}, \mathsf{p_b}, \mathsf{p_1}, \mathsf{p_2}, \mathsf{Q}, \mathsf{q_{ab}}, \mathsf{q_1}, \mathsf{q_2}\}, \{\mathsf{a}, \mathsf{b}, τ\}, \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}_\mathsf{PQ}) given by the graph in Figure 2.3.

The program described by the transitions from \mathsf{P} chooses nondeterministically during a τ-step between two options and then offers only either \mathsf{a} or \mathsf{b}. The program of \mathsf{Q}, on the other hand, performs a τ-step and then offers the choice between options \mathsf{a} and \mathsf{b} to the environment.

There are two things one might wonder about Example 2.1:

- Should one care about nondeterminism in programs? Section 2.1.2 shows how nondeterminism arises naturally in concurrent programs. And, of course, many specifications leave undefind behavior.

- Should one consider \mathsf{P} and \mathsf{Q} equivalent? This heavily depends. Section 2.2 will introduce a notion of equivalence under which the two are equivalent and one under which they differ.

Remark 2.1 (A note on τ). The action τ (the Greek letter “tau”) will stand for internal behavior in later chapters and receive special treatment in Chapter 6. For the scope of this chapter and the following three, τ is an action like every other.

Generally, this thesis aims to be consistent with notation and naming in surrounding literature. For the internal action, the whole field has converged to τ in italics—so, we will run with this. Otherwise, we follow the convention to write literals and constant names in \textsf{sans-serif} and variables in \textit{italics}.

2.1.2 Calculus of Communicating Systems

To talk about programs in this thesis, we use Milner’s (1980) Calculus of Communicating Systems (CCS), which—together with other great contributions—earned him the Turing award. It is a tiny concurrent programming language that fits in your pocket, and can be properly described mathematically!2

2 To those not familiar with CCS: The following exposition of CCS is quite condensed. Gentler introduction can be found in textbooks like Milner (1989) or Aceto et al. (2007) and in the interactive learning experience https://book.pseuco.com/.

Definition 2.2 (Syntax of \textsf{CCS}) Let \mathcal{C} be a set of channel names, and \mathcal X a set of process names. Then, \textsf{CCS} is the set of processes given by the following grammar:3 \begin{array}{cllr} P \;::=\; & c\ldotp\! P & \quad\text{with } c ∈ \mathcal{C}& \text{“input action prefix”} \\ & \overline{c}\ldotp\! P & \quad\text{with } c ∈ \mathcal{C}& \text{“output action prefix”} \\ & τ\ldotp\! P & & \text{“internal action”} \\ & \mathbf{0}& & \text{“null process”} \\ & X & \quad\text{with } X ∈ \mathcal X& \text{“recursion”} \\ & P +P & & \text{“choice”} \\ & P \, \mid\, P & & \text{“parallel composition”} \\ & P \, \mathbin{\backslash}A & \quad\text{with } A ⊆ \mathcal{C}& \text{“restriction”} \end{array} Intuitively, input action c represents receiving and output action \overline{c} expresses sending on channel c \in \mathcal{C}. A pair of input and output can “react” in a communication situation and only become internal activity τ in the view of the environment. Taken together, CCS processes exhibit the actions \mathsf{Act}_\textsf{CCS}≔ \mathcal{C}\cup \{ \overline{c} \mid c ∈ \mathcal{C}\} \cup \{τ\}.

3 To those familiar with CCS dialects: For the examples of the thesis, a simple CCS variant without value passing and relabeling suffices.

Each part of the syntax tree must end in a \mathbf{0}-process or recursion. For brevity, we usually drop a terminal \mathbf{0} when writing terms, e.g., just writing \mathsf{ac} for \mathsf{ac}\ldotp\! \mathbf{0}.

We place parenthesis (…) in terms where the syntax trees are otherwise ambiguous, but understand the choice operator + and the parallel operator \mid to be associative. This convention means that (P_1 +P_2) +P_3 and P_1 +(P_2 +P_3) are representations of the same term in our view.

Example 2.2 (Concurrent philosophers) Following tradition, we express our examples in terms of philosophers who need forks to eat spaghetti.4 Consider two philosophers \mathsf{P_A} and \mathsf{P_B} (from Figure 2.4) who want to grab a resource \mathsf{fork}, in order to eat, which we model as receiving communication. We express \mathsf{P_A} eating with \mathsf{a} and \mathsf{P_B} eating with \mathsf{b}. The philosopher processes read: \begin{gathered} \mathsf{P_A} ≔ \mathsf{fork}\ldotp\! \mathsf{a}\ldotp\! \mathbf{0}\\ \mathsf{P_B} ≔ \mathsf{fork}\ldotp\! \mathsf{b}\ldotp\! \mathbf{0} \end{gathered} A transition system representation of \mathsf{P_A}’s behavior can be seen in Figure 2.5. Process \mathsf{P} captures the whole scenario where the two philosophers compete for the fork using communication: \mathsf{P} ≔ ( \overline{\mathsf{fork}}\ldotp\! \mathbf{0}\mid\mathsf{P_A} \mid\mathsf{P_B} ) \mathbin{\backslash}\{\mathsf{fork}\} The restriction … \mathbin{\backslash}\{\mathsf{fork}\} expresses that the \mathsf{fork}-channel can only be used for communication within the system.

4 The philosopher meme is due to Dijkstra and Hoare (1985). Of course, you can just as well read the examples to be about computer programs that race for resources.

As the \overline{\mathsf{fork}}-message can be consumed by just one of the two philosophers, process \mathsf{P} expresses exactly the program behavior seen in state \mathsf{P} of Example 2.1. A process matching \mathsf{Q} will follow in Example 2.3.

The formal relationship between process terms of \textsf{CCS} and their transition system is established by the following semantics.

Definition 2.3 (CCS semantics) Given an assignment of names to processes, \mathcal V\colon \mathcal X→ \textsf{CCS}, the operational semantics {\xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}_\textsf{CCS}} ⊆ \textsf{CCS}× \mathsf{Act}_\textsf{CCS}× \textsf{CCS} is defined inductively by the inference rules of Figure 2.6.5 A process P ∈ \textsf{CCS} now denotes a state within system (\textsf{CCS}, \mathsf{Act}_\textsf{CCS}, \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}_\textsf{CCS}).

5 Such rules are read like this: “If we can derive the facts above the line, we may infer the relation below.” Rule \mathrm{Pre}, having no premises, serves as the only axiom of this inference system. Figure 2.7 shows how to stack such rules to infer that a certain step is possible for a process.

\mathrm{Pre}\;\dfrac{\begin{matrix} \end{matrix}}{ α\ldotp\! P \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P }\hphantom{\mathrm{Pre}} \qquad \mathrm{Rec}\;\dfrac{\begin{matrix} P \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P' \qquad \mathcal V(X) = P \end{matrix}}{ X \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P' }\hphantom{\mathrm{Rec}} \mathrm{Choice_1}\;\dfrac{\begin{matrix} P_1 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_1' \end{matrix}}{ P_1 +P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_1' }\hphantom{\mathrm{Choice_1}}\qquad \mathrm{Choice_2}\;\dfrac{\begin{matrix} P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_2' \end{matrix}}{ P_1 +P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_2' }\hphantom{\mathrm{Choice_2}} \mathrm{Par_1}\;\dfrac{\begin{matrix} P_1 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_1' \end{matrix}}{ P_1 \mid P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_1' \mid P_2 }\hphantom{\mathrm{Par_1}}\qquad \mathrm{Par_2}\;\dfrac{\begin{matrix} P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_2' \end{matrix}}{ P_1 \mid P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P_1 \mid P_2' }\hphantom{\mathrm{Par_2}} \mathrm{Com_1}\;\dfrac{\begin{matrix} P_1 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{c}$}}}_\textsf{CCS}P_1' \qquad P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\overline{c}}$}}}_\textsf{CCS}P_2' \end{matrix}}{ P_1 \mid P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{τ}$}}}_\textsf{CCS}P_1' \mid P_2' }\hphantom{\mathrm{Com_1}}\; \mathrm{Com_2}\;\dfrac{\begin{matrix} P_1 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\overline{c}}$}}}_\textsf{CCS}P_1' \qquad P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{c}$}}}_\textsf{CCS}P_2' \end{matrix}}{ P_1 \mid P_2 \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{τ}$}}}_\textsf{CCS}P_1' \mid P_2' }\hphantom{\mathrm{Com_2}} \mathrm{Res}\;\dfrac{\begin{matrix} P \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P' \qquad \nexists c \in A \ldotp α = c \lor α = \overline{c} \end{matrix}}{ P \mathbin{\backslash}A \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}}_\textsf{CCS}P' \mathbin{\backslash}A }\hphantom{\mathrm{Res}}

So, when we were writing “\mathsf{P_A} ≔ \mathsf{fork}\ldotp\! \mathsf{a}\ldotp\! \mathbf{0}” above, this was actually to claim that we are talking about a CCS system where the value of \mathcal V for the process name \mathsf{P_A} \in \mathcal X is defined by \mathcal V(\mathsf{P_A}) ≔ \mathsf{fork}\ldotp\! \mathsf{a}\ldotp\! \mathbf{0}. By the semantics, this also leads to the existence of a state \mathsf{P_A} in the CCS transition system, and usually that is the entity we are interested in when defining a process.

Feel free to go ahead and check that the transitions of Example 2.1 indeed match those that Definition 2.3 prescribes for \mathsf{P} of Example 2.2! (For readability, Example 2.1, has shorter state names, however.) For instance, the transition \mathsf{P} \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{τ}$}}} \mathsf{p_a} in the CCS system of Example 2.1 would be justified by the derivation in Figure 2.7 with \mathsf{p_a} = ( \mathbf{0}\mid\mathsf{a} \mid\mathsf{P_B} ) \mathbin{\backslash}\{\mathsf{fork}\}.

\mathrm{Rec}\;\dfrac{\begin{matrix} \hspace{-2em} \mathrm{Res}\;\dfrac{\begin{matrix} \hspace{-2em} \mathrm{Com_2}\;\dfrac{\begin{matrix} \hspace{-2em} \mathrm{Pre}\;\dfrac{\begin{matrix} \vphantom{\xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\overline{\mathsf{fork}}}$}}}_\textsf{CCS}} \end{matrix}}{ \overline{\mathsf{fork}} \xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\overline{\mathsf{fork}}}$}}}_\textsf{CCS}\mathbf{0} } \quad \mathrm{Par_1}\;\dfrac{\begin{matrix} \hspace{-2em} \mathrm{Rec}\;\dfrac{\begin{matrix} \hspace{-2em} \mathrm{Pre}\;\dfrac{\begin{matrix} \end{matrix}}{ \mathsf{fork}\ldotp\! \mathsf{a} \xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\mathsf{fork}}$}}}_\textsf{CCS} \mathsf{a} } \qquad \begin{array}{c} \vphantom{\mathcal V\xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\mathsf{fork}}$}}}_\textsf{CCS}} \\ \vphantom{\xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\mathsf{fork}}$}}}_\textsf{CCS}} \mathcal V(\mathsf{P_A}) = \mathsf{fork}\ldotp\! \mathsf{a} \end{array} \end{matrix}}{ \mathsf{P_A} \xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\mathsf{fork}}$}}}_\textsf{CCS}\mathsf{a} } \end{matrix}}{ \mathsf{P_A} \mid\mathsf{P_B} \xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\mathsf{fork}}$}}}_\textsf{CCS}\mathsf{a} \mid\mathsf{P_B} } \end{matrix}}{ \overline{\mathsf{fork}} \mid\mathsf{P_A} \mid\mathsf{P_B} \xrightarrow{{\raisebox{-2pt}{$\scriptstyle{τ}$}}}_\textsf{CCS} \mathbf{0}\mid\mathsf{a} \mid\mathsf{P_B} } \hspace{-1em} \begin{matrix} \vphantom{\xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\overline{\mathsf{fork}}}$}}}_\textsf{CCS}}\\ τ \notin \{\mathsf{fork}, \overline{\mathsf{fork}}\} \end{matrix} \end{matrix}}{ ( \overline{\mathsf{fork}} \mid\mathsf{P_A} \mid\mathsf{P_B} ) \mathbin{\backslash}\{\mathsf{fork}\} \xrightarrow{{\raisebox{-2pt}{$\scriptstyle{τ}$}}}_\textsf{CCS} ( \mathbf{0}\mid\mathsf{a} \mid\mathsf{P_B} ) \mathbin{\backslash}\{\mathsf{fork}\} } \hspace{-1em} \begin{matrix} \vphantom{\xrightarrow{{\raisebox{-2pt}{$\scriptstyle{\overline{\mathsf{fork}}}$}}}_\textsf{CCS}}\\ \mathcal V(\mathsf{P}) = ( \overline{\mathsf{fork}} \mid\mathsf{P_A} \mid\mathsf{P_B} ) \mathbin{\backslash}\{\mathsf{fork}\} \end{matrix} \end{matrix}}{ \mathsf{P} \xrightarrow{{\raisebox{-2pt}{$\scriptstyle{τ}$}}}_\textsf{CCS}( \mathbf{0}\mid\mathsf{a} \mid\mathsf{P_B} ) \mathbin{\backslash}\{\mathsf{fork}\} }

Therefore, after one step from \mathsf{P}, the (internal) \mathsf{fork} has been consumed, and only one philosopher may eat. From the outside, this appears as nondeterminism of the system.

Nondeterminism as in \mathsf{P} of Example 2.1 can be understood as a natural phenomenon in models with concurrency. The model leaves unspecified which of two processes will consume an internal resource and, to the outsider, it is transparent which one took the resource until they communicate. There are other ways how nondeterminism plays a crucial role in models, for instance, as consequence of abstraction or of parts that are left open in specifications.

The second process \mathsf{Q} of Example 2.1 can be understood as a deterministic sibling of \mathsf{P}.

Example 2.3 (Deterministic philosophers) A process matching the transitions from \mathsf{Q} in Example 2.1 would be the following, where the philosophers take the fork as a team and then let the environment choose who of them eats: \mathsf{Q} ≔ (\overline{\mathsf{fork}}\ldotp\! \mathbf{0}\mid\mathsf{fork}\ldotp\! ( \mathsf{a}\ldotp\! \mathbf{0}+\mathsf{b}\ldotp\! \mathbf{0})) \mathbin{\backslash}\{\mathsf{fork}\}.

But are \mathsf{P} and \mathsf{Q} equivalent?

2.2 Behavioral Equivalences

A behavioral equivalence formally defines when to consider two processes (or states, or programs) as equivalent. Evidently, there are different ways of choosing such a notion of equivalence. Also, sometimes we are interested in a behavioral preorder, for instance, as a way of saying that a program does “less than” what some specification allows. Such a relationship is often also called refinement or inclusion, but we will go with preorder.

This section quickly introduces the most common representatives of behavioral equivalences: Trace equivalence (and preorder), simulation equivalence (and preorder), and bisimilarity. We will then observe that the notions themselves can be compared in a hierarchy of equivalences.

2.2.1 Trace Equivalence

Every computer science curriculum features automata and their languages sometime at the beginning. Accordingly, comparing two programs in terms of the sequences of input/output events they might expose is a natural starting point to talk about behavioral equivalences. Such sequences of actions are referred to as traces.

Definition 2.4 (Traces) The set of traces of a state \mathsf{Traces}(p) \subseteq \mathit{Act}^* is inductively defined as

- \texttt{()}∈ \mathsf{Traces}(p),6

- α {\vec w} ∈ \mathsf{Traces}(p) if there is p' with p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p' and \vec w ∈ \mathsf{Traces}(p').7

6 We denote the empty word by \texttt{()}.

abbreviation Labeled_Transition_Systems.lts.traces

abbreviation Labeled_Transition_Systems.lts.tracesDefinition 2.5 (Trace equivalence) Two states p and q are considered trace-equivalent, written p \sim_\mathrm{T} q, if \mathsf{Traces}(p) = \mathsf{Traces}(q).8

abbreviation Strong_Equivalences.lts.trace_equivalent

abbreviation Strong_Equivalences.lts.trace_equivalent definition Strong_Equivalences.lts.trace_preordered

definition Strong_Equivalences.lts.trace_preorderedStates are trace-preordered, p \preceq_\mathrm{T} q, if \mathsf{Traces}(p) ⊆ \mathsf{Traces}(q).9

Example 2.4 The traces for the processes of Example 2.1 would be \mathsf{Traces}(\mathsf{P}) = \{\texttt{()}, τ, τ\mathsf{a}, τ\mathsf{b}\} = \mathsf{Traces}(\mathsf{Q}). Consequently, \mathsf{P} and \mathsf{Q} are trace-equivalent, \mathsf{P} \sim_\mathrm{T} \mathsf{Q}.

As \mathsf{Traces}(\mathsf{p_a}) = \{\texttt{()}, \mathsf{a}\} ⊆ \{\texttt{()}, \mathsf{a}, \mathsf{b}\} = \mathsf{Traces}(\mathsf{q_{ab}}), \mathsf{p_a} is trace-preordered to \mathsf{q_{ab}}, \mathsf{p_a} \preceq_\mathrm{T} \mathsf{q_{ab}}. This ordering is strict, that is, \mathsf{q_{ab}} \not\preceq_\mathrm{T} \mathsf{p_a}, due to \mathsf{b} ∈ \mathsf{Traces}(\mathsf{q_{ab}}) but \mathsf{b} \notin \mathsf{Traces}(\mathsf{p_a}). We could say that trace \mathsf{b} constitutes a difference between \mathsf{q_{ab}} and \mathsf{p_a}.

Proposition 2.1 Trace preorder \preceq_\mathrm{T} is indeed a preorder (i.e., transitive and reflexive)10 and trace equivalence \sim_\mathrm{T} is indeed an equivalence relation11 (i.e., transitive, reflexive, and moreover symmetric).12

12 This thesis states many facts, only linking to their proof. In this case, proofs can be found in the Isabelle/HOL formalization, indicated by  .

.

Trace equivalence (and preorder) give straightforward denotational semantics to programs: The sets of traces they might expose. For many formal languages, these mathematical objects can be constructed directly from the expressions of the language. With the idea that the program text “denotes” its possible executions, the set of traces is called a “denotation” in this context. CCS, as we use it, follows another approach to semantics, namely the one of “operational semantics,” where the meaning of a program is in how it might interact.

There are several reasons why computer scientists did not content themselves with trace equivalence when studying interactive systems. The core argument is that, in this context, one usually does not want to consider processes like \mathsf{P} and \mathsf{Q} to be equivalent: The two might interact differently with an environment.

Example 2.5 (Live lecture) Consider a context in which \mathsf{A} actually does not want to eat and the event of \mathsf{B} eating is followed by \mathsf{B} giving a live lecture: (\dots \mid\overline{\mathsf{b}}\ldotp\!\overline{\mathsf{lecture}}\ldotp\! \mathbf{0}) \mathbin{\backslash}\{\mathsf{a}, \mathsf{b}\} If we insert \mathsf{Q} for the “\dots” in this context, the choice after the collective \mathsf{fork}-acquisition is resolved to the \mathsf{B}-path. So the overall system chooses for \mathsf{B} to eat, and the lecture happens: (\mathsf{Q} \mid\overline{\mathsf{b}}\ldotp\!\overline{\mathsf{lecture}}\ldotp\! \mathbf{0}) \mathbin{\backslash}\{\mathsf{a}, \mathsf{b}\} \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\tau}$}}} \cdot \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\tau}$}}} \cdot \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\overline{\mathsf{lecture}}}$}}} (\mathbf{0}\mid\mathbf{0}) \mathbin{\backslash}\{\mathsf{a}, \mathsf{b}\} With \mathsf{P}, an analogous path is possible. But there is, moreover, a possibility of \mathsf{A} obtaining the fork, which prevents \mathsf{B} from eating. There, the system can end up deadlocked with no lecture: (\mathsf{P} \mid\overline{\mathsf{b}}\ldotp\!\overline{\mathsf{lecture}}\ldotp\! \mathbf{0}) \mathbin{\backslash}\{\mathsf{a}, \mathsf{b}\} \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\tau}$}}} ((\mathbf{0}\mid\mathsf{a} \mid\mathsf{P_B} ) \mathbin{\backslash}\{\mathsf{fork}\} \mid\overline{\mathsf{b}}\ldotp\!\overline{\mathsf{lecture}}\ldotp\! \mathbf{0}) \mathbin{\backslash}\{\mathsf{a}, \mathsf{b}\} \overset{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}{\nrightarrow} So, if \mathsf{B}’s teaching actually happening matters to us, we might say that scenario \mathsf{P} and \mathsf{Q} are not quite equivalent.13

13 Traditionally, this feature of trace equivalence is often framed in terms of vending machines. On https://book.pseuco.com/#/interactive/trace-equivalent-vending-machines, you can play through a comparable scenario of ordering drinks where you are the environment.

Computer scientists might not all care about philosophy lectures. But they care about the liveness of interactive programs. Therefore, they have invented many program equivalences that pay more attention to possibilities coming and going. The most prominent ones are the topic of the next subsection.

2.2.2 Similarity and Bisimilarity

The other big approach to behavioral equivalence of programs is the one of relating parts of their state spaces to one-another. The idea here is to identify which states of one program can be used to simulate the behavior of the other.

Definition 2.6 (Simulation) A relation on states, \mathcal{R} ⊆ \mathcal{P}× \mathcal{P}, is called a simulation if, for each (p, q) ∈ \mathcal{R} and α ∈ \mathit{Act} with p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{a}$}}} p' there is a q' with q \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} q' and (p', q') ∈ \mathcal{R}.14

definition Strong_Equivalences.lts.simulation

definition Strong_Equivalences.lts.simulationDefinition 2.7 ((Bi-)similarity) Simulation preorder, simulation equivalence and bisimilarity are defined as follows:

- p is simulated by q, p \preceq_\mathrm{S} q, iff there is a simulation \mathcal{R} with (p, q) ∈ \mathcal{R}.15

- p is similar to q, p \sim_\mathrm{S} q, iff p \preceq_\mathrm{S} q and q \preceq_\mathrm{S} p.16

- p is bisimilar to q, p \sim_\mathrm{B} q, iff there is a symmetric simulation \mathcal{R} (i.e. \mathcal{R} = \mathcal{R}^{-1}) with (p, q) ∈ \mathcal{R}.17

definition Strong_Equivalences.lts.simulated_by

definition Strong_Equivalences.lts.simulated_by abbreviation Strong_Equivalences.lts.similar

abbreviation Strong_Equivalences.lts.similar definition Strong_Equivalences.lts.bisimilar

definition Strong_Equivalences.lts.bisimilarWe also call a symmetric simulation bisimulation for short.18 Canceled symbols of relations refer to their negations, for instance, p \not\preceq_\mathrm{S} q iff there is no simulation \mathcal{R} with (p, q) ∈ \mathcal{R}.

18 Other authors use a weaker definition, namely, that \mathcal{R} is a bisimulation if \mathcal{R} and \mathcal{R}^{-1} are simulations. Both definitions lead to the characterization of the same notion of bisimilarity. The symmetric version works better for our purpose of establishing a nice link to logics and games.

Example 2.6 (Philosophical simulations) The following relations are simulations on the system of Example 2.1:

- the empty relation \mathcal{R}_\varnothing ≔ \varnothing;

- the identity relation \mathcal{R}_\mathrm{id} ≔ \mathrm{id}_{\{\mathsf{P}, \mathsf{p_a}, \mathsf{p_b}, \mathsf{p_1}, \mathsf{p_2}, \mathsf{Q}, \mathsf{q_{ab}}, \mathsf{q_1}, \mathsf{q_2}\}} = \{(\mathsf{P}, \mathsf{P}),\allowbreak (\mathsf{p_a}, \mathsf{p_a}),\allowbreak (\mathsf{p_b}, \mathsf{p_b}),\allowbreak (\mathsf{p_1}, \mathsf{p_1}),\allowbreak (\mathsf{p_2}, \mathsf{p_2}),\allowbreak (\mathsf{Q}, \mathsf{Q}),\allowbreak (\mathsf{q_{ab}}, \mathsf{q_{ab}}), \allowbreak(\mathsf{q_1}, \mathsf{q_1}), (\mathsf{q_2}, \mathsf{q_2})\};

- the universal relation between all terminal states

\mathcal{R}_\mathrm{term} ≔ \{\mathsf{p_1}, \mathsf{p_2}, \mathsf{q_1}, \mathsf{q_2}\} \times \{\mathsf{p_1}, \mathsf{p_2}, \mathsf{q_1}, \mathsf{q_2}\}; - more generally, the relation from terminal states to all other states:

\mathcal{R}_\mathrm{up} ≔ \{\mathsf{p_1}, \mathsf{p_2}, \mathsf{q_1}, \mathsf{q_2}\} × \mathcal{P}; - a minimal simulation for \mathsf{P} and \mathsf{Q}:

\mathcal{R}_\mathsf{PQ} ≔ \{(\mathsf{P}, \mathsf{Q}), (\mathsf{p_a}, \mathsf{q_{ab}}), (\mathsf{p_b}, \mathsf{q_{ab}}), (\mathsf{p_1}, \mathsf{q_1}), (\mathsf{p_2}, \mathsf{q_2})\}; - and the combination of the above \mathcal{R}_\mathrm{big} ≔ \mathcal{R}_\mathrm{id} ∪ \mathcal{R}_\mathrm{term} ∪ \mathcal{R}_\mathrm{up} ∪ \mathcal{R}_\mathsf{PQ}.

The simulation \mathcal{R}_\mathsf{PQ} shows that \mathsf{P} \preceq_\mathrm{S} \mathsf{Q}.

However, there is no simulation that preorders \mathsf{Q} to \mathsf{P}, as there is no way to simulate the transition \mathsf{Q} \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{τ}$}}} \mathsf{q_{ab}} from \mathsf{P} for lack of a successor that allows \mathsf{a} and \mathsf{b} as does \mathsf{q_{ab}}. (In Section 2.3, we will discuss how to capture such differences more formally.)

Thus, \mathsf{Q} \not\preceq_\mathrm{S} \mathsf{P}, and \mathsf{P} \nsim_\mathrm{S} \mathsf{Q}. Moreover, there cannot be a symmetric simulation, \mathsf{P} \nsim_\mathrm{B} \mathsf{Q}.

Proposition 2.2 The simulation preorder \preceq_\mathrm{S} is indeed a preorder19, and \sim_\mathrm{S} and \sim_\mathrm{B} are equivalences.20

Example 2.6 shows that similarity and bisimilarity are not implied by trace equivalence. Still, the notions are connected, which is commonly referred to as bisimilarity being “stronger” than trace equivalence.

2.2.3 Equivalence Hierarchies

Bisimilarity, similarity and trace equivalence form a small hierarchy of equivalences in the sense that they imply one-another in one direction, but not in the other. Let us quickly make this formal:

(Arrows mean implication.)

Lemma 2.1 The relation \sim_\mathrm{B} is itself a symmetric simulation.21

Corollary 2.1 If p \sim_\mathrm{B} q, then p \sim_\mathrm{S} q.22

Lemma 2.2 If p \preceq_\mathrm{S} q, then p \preceq_\mathrm{T} q.23 (Consequently, p \sim_\mathrm{S} q also implies p \sim_\mathrm{T} q.24)

We also have seen with Example 2.6 that this hierarchy of implications (visualized in Figure 2.8) is strict between trace and simulation preorder in the sense that there exist p,q with p \preceq_\mathrm{T} q but not p \preceq_\mathrm{S} q. The hierarchy also is strict between similarity and bisimilarity as the following example shows.

Example 2.7 (Trolled philosophers) Let us extend \mathsf{Q} of Example 2.3 to include a troll process (highlighted in blue) that might consume the \mathsf{fork} and then do nothing: \mathsf{T} ≔ (\overline{\mathsf{fork}} \mid{\color{RoyalBlue}\mathsf{fork}} \mid\mathsf{fork}\ldotp\! ( \mathsf{a} +\mathsf{b} )) \mathbin{\backslash}\{\mathsf{fork}\}. This adds another deadlock state to the transition system, seen in Figure 2.9.

With respect to similarity, this change is invisible, that is, \mathsf{Q} \sim_\mathrm{S} \mathsf{T}. (Formal argument: The relation \{ (\mathsf{Q}, \mathsf{T}), (\mathsf{T}, \mathsf{Q}), (\mathsf{q_{ab}}, \mathsf{t_{ab}}), (\mathsf{t_{ab}}, \mathsf{q_{ab}}), (\includegraphics[height=1em, alt=q3]{img/trollface.png}, \mathsf{q_{ab}}) \} \cup \{ \mathsf{q_1}, \mathsf{q_2}, \mathsf{t_1}, \mathsf{t_2}, \includegraphics[height=1em, alt=q3]{img/trollface.png}\} \times \{ \mathsf{q_1}, \mathsf{q_2}, \mathsf{t_1}, \mathsf{t_2}, \includegraphics[height=1em, alt=q3]{img/trollface.png}\} is a simulation.)

However, to bisimilarity, \mathsf{T} \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{τ}$}}} \includegraphics[height=1em, alt=q3]{img/trollface.png} constitutes a difference. There cannot be a symmetric simulation handling this transition as \mathsf{Q} has no deadlocked successors. Thus \mathsf{Q} \nsim_\mathrm{B} \mathsf{T}.

The equivalences we have discussed so far could also be understood as abstractions of an even finer equivalence, namely graph isomorphism:

Definition 2.8 (Graph isomorphism) A bijective function f \colon \mathcal{P}\to \mathcal{P} is called a graph isomorphism on a transition system if, for all p,p', α, the transition p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p' exists if and only if the transition f(p) \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} f(p') exists.25

definition Strong_Equivalences.lts.isomorphism

definition Strong_Equivalences.lts.isomorphism definition Strong_Equivalences.lts.is_isomorphic_to

definition Strong_Equivalences.lts.is_isomorphic_toTwo states p and q are considered graph-isomorphism-equivalent, p \sim_\mathrm{ISO} q, iff there is a graph isomorphism f with f(p) = q.26

Example 2.8 Consider the transition system in Figure 2.10. \mathsf{p_{even}} \sim_\mathrm{ISO} \mathsf{p_{odd}} because f ≔ \{\mathsf{p_{even}} \mapsto \mathsf{p_{odd}}, \mathsf{p_{odd}} \mapsto \mathsf{p_{even}}\} is a graph isomorphism.

Lemma 2.3 The relation \sim_\mathrm{ISO} is itself a symmetric simulation and thus p \sim_\mathrm{ISO} q implies p \sim_\mathrm{B} q.27

Once again, the hierarchy is strict because of bisimilarity being less restricted in the matching of equivalent states.

Example 2.9 (Graph isomorphism counts too much) Consider the processes \mathsf{P_1} ≔ (\overline{\mathsf{fork}} \mid\mathsf{fork}) \mathbin{\backslash}\{\mathsf{fork}\} and \mathsf{P_2} ≔ (\overline{\mathsf{fork}} \mid\mathsf{fork} \mid\mathsf{fork}) \mathbin{\backslash}\{\mathsf{fork}\}. \mathsf{P_1} can transition to (\mathbf{0}\mid\mathbf{0}) \mathbin{\backslash}\{\mathsf{fork}\}, while \mathsf{P_2} has two options, namely (\mathbf{0}\mid\mathbf{0}\mid\mathsf{fork}) \mathbin{\backslash}\{\mathsf{fork}\} and (\mathbf{0}\mid\mathsf{fork} \mid\mathbf{0}) \mathbin{\backslash}\{\mathsf{fork}\}. All three reachable processes are deadlocks and thus isomorphic. But \mathsf{P_1} \nsim_\mathrm{ISO} \mathsf{P_2} because no bijection can connect the one successor of \mathsf{P_1} and the two of \mathsf{P_2}. However, \mathsf{P_1} \sim_\mathrm{B} \mathsf{P_2}, as bisimilarity is more relaxed.

Graph isomorphism is the strongest equivalence, we have discussed so far. But stronger needs not be better.

2.2.4 Congruences

One of the prime quality criteria for behavioral equivalences is whether they form congruences with respect to fitting semantics or other important transformations. A congruence relates mathematical objects that can stand in for one-another in certain contexts, which, for instance, allows term rewriting. The concept is closely linked to another core notion of mathematics: monotonicity.

Definition 2.9 (Monotonicity and congruence) An n-ary function f \colon B_1 \times \dots \times B_n \to C is called monotonic with respect to a family of preorders (B_k, \leq_k) and (C, \leq) iff, for all b \in B_1 \times \dots \times B_n and b' \in B_1 \times \dots \times B_n, it is the case that b^{\vphantom{\prime}}_k \leq^{\vphantom{\prime}}_k b'_k for all k \leq n implies that f(b) \leq f(b'). We will usually encounter settings where all components use the same order {(B_1, \leq_1)} = \cdots = {(B_n, \leq_n)} = {(C, \leq)}.

The relation \leq is then referred to as a precongruence for f. If \leq moreover is symmetric (and thus an equivalence relation), then \leq is called a congruence for f.

Example 2.10 (Parity as congruence) As a standard example for a congruence, consider the equivalence relation of equally odd numbers: {\sim_\mathsf{odd}} ≔ \{(m,n) \in \mathbb{N}\times \mathbb{N}\mid m \bmod 2 = n \bmod 2\}. For instance, 1 \sim_\mathsf{odd} 3 and 0 \sim_\mathsf{odd} 42, but not 42 \sim_\mathsf{odd} 23.

\sim_\mathsf{odd} is a congruence for addition and multiplication. For instance, the sum of two odd numbers will always be even; the product of two odd numbers, will always be odd.

But \sim_\mathsf{odd} is no congruence for integer division. For instance, 2 \sim_\mathsf{odd} 4, but 2 / 2 = 1 \not\sim_\mathsf{odd} 2 = 4 / 2.

Proposition 2.3 Trace equivalence and bisimilarity on the CCS transition system (Definition 2.3) are congruences for the operators of CCS (Definition 2.2).28

28 For a generic proof of the congruence properties of trace equivalence, bisimilarity, and most other notions of the equivalence spectrum, see Gazda et al. (2020).

The nice thing about congruences is that we can use them to calculate with terms, as is common in mathematics.

Graph isomorphism fails to be a congruence for CCS operators. For instance, consider \mathsf{a}\ldotp\! (\mathbf{0}\mid\mathbf{0}) \sim_\mathrm{ISO} \mathsf{a}\ldotp\! \mathbf{0}. But if one inserts the two terms in a choice context, the results differ with respect to isomorphism: \mathsf{a}\ldotp\! \mathbf{0}+ \mathsf{a}\ldotp\! (\mathbf{0}\mid\mathbf{0}) \nsim_\mathrm{ISO} \mathsf{a}\ldotp\!\mathbf{0}+ \mathsf{a}\ldotp\!\mathbf{0}\sim_\mathrm{ISO} \mathsf{a}\ldotp\! \mathbf{0}.

By now, we have encountered some behavioral equivalences. Both, bisimilarity and trace equivalence make for fine congruences that allow calculations on process-algebraic expressions of \textsf{CCS}. Which one to choose? That there is no definitive answer is a root cause of this thesis.

Remark 2.2 (Origins of linear time and branching time). This thesis is a consequence of the classic idea that behavioral equivalences form a spectrum between linear-time notions and branching-time notions. This hierarchy corresponds to “how much” calculation is allowed on algebraic expressions of processes.

Bisimilarity is the prototypical branching-time equivalence: every decision during execution counts. Trace equivalence is the prototypical linear-time notion: only sequences of actions count. Therefore, more can be seen as equivalent than in bisimilarity.

In the concurrency theory community, two prototypical process algebras stand for this spread: CCS for bisimilarity and branching time, and CSP for trace equivalence and linear-time. CSP (“Communicating Sequential Processes”) is due to Hoare, another Turing award winner.

Hoare (1985, Section 7.4.1) summarizes the opposing design philosophies between CCS and CSP as follows:

[…] CCS is intended to serve as a framework for a family of models, each of which may make more identifications than CCS but cannot make less. To avoid restricting the range of models, CCS makes only those identifications which seem absolutely essential. In the mathematical model of [CSP] we have pursued exactly the opposite goal—we have made as many identifications as possible, preserving only the most essential distinctions. We have therefore a far richer set of algebraic laws.

The first comprehensive study on the correspondence between the hierarchy of equivalences and algebraic laws has been dubbed “linear-time–branching-time spectrum” by van Glabbeek (1990).29

Historically, therefore, this thesis originates from the tension between the works of two Turing award winners: Milner’s CCS and Hoare’s CSP. Or, as Hoare (2006) puts it: “It seems that CCS and CSP occupy extreme ends of almost every spectrum.”

But do not worry—although our research question is a consequence of the possibility to come up with various process algebras, this thesis will stick to only one, CCS.

2.2.5 Quotient Systems and Minimizations

One of the prime applications of behavioral equivalences is to battle the state space explosion problem: The transition systems of parallel processes quickly grow in size, usually exponentially with regard to the number of communication participants. But many of the states tend to be equivalent in behavior, and can be treated as one with their equals. Such minimization enables the handling of vastly bigger input models.

Example 2.11 (Trolled philosophers, minimized) In Example 2.7 of “trolled philosophers,” all terminal states are bisimilar, \mathsf{t_1} \sim_\mathrm{B} \mathsf{t_2} \sim_\mathrm{B} \includegraphics[height=1em, alt=q3]{img/trollface.png}. We can thus merge them into one state \smash{\includegraphics[height=1em, alt=q3]{img/trollface.png}}^\mathsf{m} as depicted in Figure 2.11, without affecting the behavioral properties of the other states with respect to bisimilarity, that is, \mathsf{t_{ab}} \sim_\mathrm{B} \mathsf{t_{ab}^m} and \mathsf{T} \sim_\mathrm{B} \mathsf{T^m}.

Definition 2.10 (Quotient systems) Given an equivalence relation \sim on states, and a transition system \mathcal{S}=(\mathcal{P},\mathit{Act},\xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}), each equivalence class for p \in \mathcal{P} is defined [p]_{\sim} \mathrel{≔}\{p' \in \mathcal{P}\mid p \sim p'\}.

The quotient system is defined as {\mathcal{S} \! / \!\! \sim} \mathrel{≔}({\mathcal{P} \! / \!\! \sim}, \mathit{Act}, \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}_{\sim}), where {\mathcal{P} \! / \!\! \sim} is the set of equivalence classes \{ [p]_{\sim} \mid p \in \mathcal{P}\}, and P \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\alpha}$}}}_\sim P' \text{ iff there are $p \in P$ and $p' \in P'$ such that $p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\alpha}$}}} p'$.}

Proposition 2.4 On the combined system of \mathcal{S} and {\mathcal{S} \! / \!\! \sim_\mathrm{B}^{\mathcal{S}}}, states and their derived class are bisimilar, p \sim_\mathrm{B} [p]_{\sim_\mathrm{B}^{\mathcal{S}}}.

The same reasoning does not apply to graph isomorphism: In Example 2.11, the terminal states might be graph-isomorphic, but merging them changes the number of states and thus prevents isomorphism between \mathsf{T} and \mathsf{T^m}.

Together with the issue of congruence, we have now seen two reasons why graph isomorphism does not allow the kind of handling we hope for in behavioral equivalences. Thus, the following will focus on equivalences of bisimilarity and below. The next section will revisit a deeper, philosophical reason why bisimilarity is a reasonable limit of the properties one might care about in the comparison of processes.

2.3 Modal Logics

Modal logics are logics in which one can formalize how facts in one possible world relate to other possible worlds. In the computer science interpretation, the possible worlds are program states, and typical statements have the form: “If X happens during the execution, then Y will happen in the next step.”

Each modal logic naturally characterizes a behavioral equivalence. In this section, we show how such characterization works and argue that, for our purposes of comparative semantics, modal characterizations are a superb medium. In the next chapter, the equivalence hierarchy will turn out to be a hierarchy of modal sublogics.

2.3.1 Hennessy–Milner Logic to Express Observations

Hennessy & Milner (1980) introduce the modal logic that is now commonly called Hennessy–Milner logic (HML) as a “little language for talking about programs.” The idea is that HML formulas express “experiments” or “tests” that an observer performs interacting with a program.

Definition 2.11 (Hennessy–Milner logic) Formulas of Hennessy–Milner logic \mathsf{HML} are given by the grammar:30 \begin{array}{rcllr} φ & \;::=\;& \langle α \rangle φ & \quad\text{with } α ∈ \mathit{Act}& \text{“observation”} \\ & \;\mid\;& \textstyle\bigwedge_{i \in I}φ_i & \quad\text{with index set } I & \text{“conjunction”} \\ & \;\mid\;& \neg φ & & \text{“negation”} \\ \end{array}

datatype Hennessy_Milner_Logic.hml_formula

datatype Hennessy_Milner_Logic.hml_formulaWe also write conjunctions as sets \textstyle\bigwedge \{φ_1, φ_2…\}. The empty conjunction \textstyle\bigwedge \varnothing is denoted by \top and serves as the nil-element of HML syntax trees. We also usually omit \top when writing down formulas, e.g., shortening \langle \mathsf{a} \rangle\langle \mathsf{b} \rangle\top to \langle \mathsf{a} \rangle\langle \mathsf{b} \rangle, which says that one may observe \mathsf{a} and then \mathsf{b}.

We assume a form of associativity through the convention that conjunctions are flat in the sense that they do not immediately contain other conjunctions. Thus, we consider \textstyle\bigwedge \{ \langle \mathsf{a} \rangle, \textstyle\bigwedge \{\langle \mathsf{b} \rangle\} \} and \textstyle\bigwedge \{ \langle \mathsf{a} \rangle, \textstyle\bigwedge \{\langle \mathsf{b} \rangle\}, \top\} just as different representatives of the flattened formula \textstyle\bigwedge \{\langle \mathsf{a} \rangle, \langle \mathsf{b} \rangle\}, stating that one may observe \mathsf{a} and one may observe \mathsf{b}.

We do not restrict the size of index sets in conjunctions. In particular, infinitary conjunctions are allowed, as we will discuss in Remark 2.3.

The intuition behind HML is that it describes what sequences of behavior one may or may not observe of a system. Observations \langle α \rangle… are used to build up possible action sequences; conjunctions \textstyle\bigwedge \{…\} capture branching points in decision trees; and negations \neg… describe impossibilities.

Definition 2.12 (HML semantics) Given a transition system (\mathcal{P},\mathit{Act},\xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}), the semantics of HML \llbracket \cdot \rrbracket \colon \mathsf{HML}→ 2^{\mathcal{P}} is defined recursively by:31

\begin{array}{rcl} \llbracket \langle α \rangle φ \rrbracket & ≔ & \{p \mid \exists p' ∈ \llbracket φ \rrbracket \ldotp p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p'\}\\ \llbracket \textstyle\bigwedge_{i \in I}φ_i \rrbracket & ≔ & \bigcap_{i ∈ I} \llbracket φ_i \rrbracket\\ \llbracket \neg φ \rrbracket & ≔ & \mathcal{P}\mathbin{\backslash}\llbracket φ \rrbracket \end{array}

Example 2.12 Let us consider some observations on the system of Example 2.1.

- \llbracket \langle τ \rangle\langle \mathsf{a} \rangle \rrbracket = \{\mathsf{P}, \mathsf{Q}\} as both, \mathsf{P} and \mathsf{Q}, expose the trace τ\mathsf{a},

- \mathsf{Q} ∈ \llbracket \langle τ \rangle\textstyle\bigwedge \{\langle \mathsf{a} \rangle, \langle \mathsf{b} \rangle\} \rrbracket, but \mathsf{P} \notin \llbracket \langle τ \rangle\textstyle\bigwedge \{\langle \mathsf{a} \rangle, \langle \mathsf{b} \rangle\} \rrbracket as \mathsf{Q} leaves the choice between \mathsf{a} and \mathsf{b} to the environment.

- \mathsf{P} ∈ \llbracket \langle τ \rangle\neg\langle \mathsf{a} \rangle \rrbracket, but \mathsf{Q} \notin \llbracket \langle τ \rangle\neg\langle \mathsf{a} \rangle \rrbracket as \mathsf{P} can internally decide against \mathsf{a}.

2.3.2 Characterizing Bisimilarity via HML

We can now add the middle layer of our overview graphic in Figure 2.1: That two states are bisimilar precisely if they cannot be told apart using HML formulas.

Definition 2.13 (Distinctions and equivalences) We say that formula φ ∈ \mathsf{HML} distinguishes state p from state q if p ∈ \llbracket φ \rrbracket but q \notin \llbracket φ \rrbracket.32

abbreviation LTS_Semantics.lts_semantics.distinguishes

abbreviation LTS_Semantics.lts_semantics.distinguishes definition LTS_Semantics.lts_semantics.preordered

definition LTS_Semantics.lts_semantics.preordered definition LTS_Semantics.lts_semantics.equivalent

definition LTS_Semantics.lts_semantics.equivalentWe say a sublogic \smash{\mathcal{O}^{}_{}} ⊆ \mathsf{HML} preorders state p to q, p \preceq_{\smash{\mathcal{O}^{}_{}}} q, if no φ ∈ \smash{\mathcal{O}^{}_{}} distinguishes p from q.33 If the preordering goes in both directions, we say that p and q are equivalent with respect to sublogic \smash{\mathcal{O}^{}_{}}, written p \sim_{\smash{\mathcal{O}^{}_{}}} q.34

By this account, \langle τ \rangle\neg\langle \mathsf{a} \rangle of Example 2.12 distinguishes \mathsf{P} from \mathsf{Q}. On the other hand, \langle τ \rangle\textstyle\bigwedge \{\langle \mathsf{a} \rangle, \langle \mathsf{b} \rangle\} distinguishes \mathsf{Q} from \mathsf{P}. (The direction matters!) For instance, the sublogic \{\langle τ \rangle\langle \mathsf{a} \rangle, \langle τ \rangle\langle \mathsf{b} \rangle\} preorders \mathsf{P} and \mathsf{Q} in both directions; so the two states are equivalent with respect to this logic.

Proposition 2.5 (Transitivity for free) Consider an arbitrary HML sublogic \smash{\mathcal{O}^{}_{}} ⊆ \mathsf{HML}. Then, \preceq_{\smash{\mathcal{O}^{}_{}}} is a preorder, and \sim_{\smash{\mathcal{O}^{}_{}}} an equivalence relation.35

Lemma 2.4 (HML simulation) Hennessy–Milner logic equivalence \sim_{\mathsf{HML}} is a simulation relation.36

Proof. Assume it were not. Then there would need to be p \sim_{\mathsf{HML}} q with step p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p', and no q' such that q \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} q' and p' \sim_{\mathsf{HML}} q'. So there would need to be a distinguishing formula φ_{q'} for each q' that q can reach by an α-step. Consider the formula φ_α ≔ \langle α \rangle\textstyle\bigwedge_{q' \in \operatorname{Der}(q, α)} φ_{q'}. It must be true for p and false for q, contradicting p \sim_{\mathsf{HML}} q.

Lemma 2.5 (Bisimulation invariance) If p ∈ \llbracket φ \rrbracket and p \sim_\mathrm{B} q then q ∈ \llbracket φ \rrbracket.37

Proof. Induct over the structure of φ with arbitrary p and q.

- Case p ∈ \llbracket \langle α \rangle φ \rrbracket. Thus there is p' ∈ \llbracket φ \rrbracket with p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p'. Because \sim_\mathrm{B} is a simulation according to Lemma 2.1, this implies q' with p' \sim_\mathrm{B} q'. The induction hypothesis makes p' ∈ \llbracket φ \rrbracket entail q' ∈ \llbracket φ \rrbracket and thus q ∈ \llbracket \langle α \rangle φ \rrbracket.

- Case p ∈ \llbracket \textstyle\bigwedge_{i \in I}φ_i \rrbracket. The induction hypothesis on the φ_i directly leads to q ∈ \llbracket \textstyle\bigwedge_{i \in I}φ_i \rrbracket.

- Case p ∈ \llbracket \neg φ \rrbracket. Symmetry of \sim_\mathrm{B} according to Proposition 2.2, implies q \sim_\mathrm{B} p. By induction hypothesis, q ∈ \llbracket φ \rrbracket implies p ∈ \llbracket φ \rrbracket. So, using contraposition, the case implies q ∈ \llbracket \neg φ \rrbracket.

Combining bisimulation invariance for one direction and that \sim_\mathrm{\mathsf{HML}} is a symmetric simulation (Proposition 2.5 and Lemma 2.4) for the other, we obtain that \mathsf{HML} precisely characterizes bisimilarity:

Theorem 2.1 (Hennessy–Milner theorem) Bisimilarity and HML equivalence coincide, that is, p \sim_\mathrm{B} q precisely if p \sim_\mathrm{\mathsf{HML}} q.38

Remark 2.3 (Infinitary conjunctions). In the standard presentation of the Hennessy–Milner theorem, image finiteness of the transition system is assumed. This means that \operatorname{Der}(p, α) is finite for every p ∈ \mathcal{P} and α \in \mathit{Act}. The amount of outgoing transitions matters precisely in the construction of φ_α in the proof of Lemma 2.4. But as our definition of \mathsf{HML} (Definition 2.11) allows infinite conjunctions \textstyle\bigwedge_{i \in I} …, we do not need an assumption here. The implicit assumption is that the cardinality of index sets I can match that of \operatorname{Der}(p, α). The original proof by Hennessy & Milner (1980) uses binary conjunction (\varphi_1 \land \varphi_2) and thus can only express finitary conjunctions.

2.3.3 The Perks of Modal Characterizations

There is merit in also characterizing other equivalences through sublogics \smash{\mathcal{O}^{}_{}} \subseteq \mathsf{HML}. Modal characterizations have four immediate big advantages:

Modal characterizations lead to proper preorders and equivalences by design due to Proposition 2.5. That is, if a behavioral preorder (or equivalence) is defined through modal logics, there is no need of proving transitivity and reflexivity (and symmetry).

Secondly, checking state equivalence for notions of \mathsf{HML} sublogics can soundly be reduced to checks on bisimulation-minimized systems as the transformation does not affect the sets of observations for minimized states (by combining Theorem 2.1 and Proposition 2.4).

Thirdly, modal characterizations can directly unveil the hierarchy between preorders, if defined cleverly, because of the following property.

Proposition 2.6 If \smash{\mathcal{O}^{}_{}} \subseteq \smash{\mathcal{O}^{\prime}_{}} then p \preceq_{\smash{\mathcal{O}^{\prime}_{}}} q implies p \preceq_{\smash{\mathcal{O}^{}_{}}} q for all p,q.39

Pay attention to the opposing directions of \subseteq and implication here!

Fourthly, as a corollary of Proposition 2.6, modal characterizations ensure equivalences to be abstractions of bisimilarity, which is a sensible base notion of equivalence.40

40 Among other things, bisimilarity checking has a better time complexity than other notions as will be discussed in Section 3.3.3.

In Chapter 3, we will discuss how the hierarchy of behavioral equivalences can be understood nicely and uniformly if viewed through the modal lens.

2.3.4 Expressiveness and Distinctiveness

Proposition 2.6 actually is a weak version of another proposition about distinctiveness of logics.

Definition 2.14 (Preorder of expressiveness) We say that an \mathit{Act}-observation language \smash{\mathcal{O}^{}_{}} is less or equal in expressiveness to another \smash{\mathcal{O}^{\prime}_{}} iff, for any \mathit{Act}-transition system, for each φ ∈ \smash{\mathcal{O}^{}_{}}, there is φ' ∈ \smash{\mathcal{O}^{\prime}_{}} such that \llbracket φ \rrbracket = \llbracket φ' \rrbracket. (The definition can also be read with regards to a fixed transition system \mathcal{S}.)41 If the inclusion holds in both directions, \smash{\mathcal{O}^{}_{}} and \smash{\mathcal{O}^{\prime}_{}} are equally expressive.

definition LTS_Semantics.lts_semantics.leq_expressive

definition LTS_Semantics.lts_semantics.leq_expressiveDefinition 2.15 (Preorder of distinctiveness) We say that an \mathit{Act}-observation language \smash{\mathcal{O}^{}_{}} is less or equal in distinctiveness to another \smash{\mathcal{O}^{\prime}_{}} iff, for any \mathit{Act}-transition system and states p and q, for each φ ∈ \smash{\mathcal{O}^{}_{}} that distinguishes p from q, there is φ' ∈ \smash{\mathcal{O}^{\prime}_{}} that distinguishes p from q.42 If the inclusion holds in both directions, \smash{\mathcal{O}^{}_{}} and \smash{\mathcal{O}^{\prime}_{}} are equally distinctive.

definition LTS_Semantics.lts_semantics.leq_distinctive

definition LTS_Semantics.lts_semantics.leq_distinctiveLemma 2.6 Subset relationship entails expressiveness, which entails distinctiveness.

- If \smash{\mathcal{O}^{}_{}} ⊆ \smash{\mathcal{O}^{\prime}_{}}, then \smash{\mathcal{O}^{}_{}} is less or equal in expressiveness to \smash{\mathcal{O}^{\prime}_{}}.43

- If \smash{\mathcal{O}^{}_{}} is less or equal in expressiveness to \smash{\mathcal{O}^{\prime}_{}}, then \smash{\mathcal{O}^{}_{}} is less or equal in distinctiveness to \smash{\mathcal{O}^{\prime}_{}}.44

The other direction does not hold. For instance, \mathsf{HML}⊈ \mathsf{HML}\mathbin{\backslash}\{\top\}, but they are equally expressive as \neg\neg\top can cover for the removed element. At the same time, \{\top\} is more expressive than \varnothing, but equally distinctive.

The stronger version of Proposition 2.6 is thus:

Proposition 2.7 If \smash{\mathcal{O}^{}_{}} is less or equal in distinctiveness to \smash{\mathcal{O}^{\prime}_{}} then p \preceq_{\smash{\mathcal{O}^{\prime}_{}}} q implies p \preceq_{\smash{\mathcal{O}^{}_{}}} q for all p,q.45

Remark 2.4. Through the lens of expressiveness, we can also justify why our convention to implicitly flatten conjunctions is sound: As this does not change a conjunction’s truth value, \mathsf{HML} subsets with and without flattening-convention are equally expressive and thus distinctive.

Often, an equivalence may be characterized by different sublogics. In particular, one may find smaller characterizations as in the following example for bisimilarity, which will be relevant in the upcoming game characterizations.

Definition 2.16 (Bisimulation observations) Consider \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} \subseteq \mathsf{HML} described by the following grammar: \begin{array}{rcll} φ^\mathrm{\smash{\lfloor B \rfloor}} & \;::=\;& \langle α \rangle \textstyle\bigwedge_{i \in I} φ^\mathrm{\smash{\lfloor B \rfloor}}_i \\ & \;\mid\;& \neg φ^\mathrm{\smash{\lfloor B \rfloor}} \end{array}

\smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} is a proper subset of \mathsf{HML}. For instance, it lacks the observation \textstyle\bigwedge \{\langle \mathsf{a} \rangle, \langle \mathsf{b} \rangle\}. Due to the subset relation, \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} must be less or equal in expressiveness to \mathsf{HML}, but this inclusion too is strict as \top cannot be covered for. But both logics are equally distinctive!

Lemma 2.7 \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} and \mathsf{HML} are equally distinctive.46

Proof. One direction is immediate from Lemma 2.6 as \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} \subseteq \mathsf{HML}.

For the other direction, we need to establish that, for each φ ∈ \mathsf{HML} distinguishing some p from some q, there is a φ' ∈ \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} distinguishing p from q. We induct on the structure of φ with arbitrary p and q.

- Case \langle α \rangle φ distinguishes p from q. Thus there is p' such that p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p' and that φ distinguishes p' from every q' ∈ \operatorname{Der}(q, α). By induction hypothesis, there must be φ'_{q'} ∈ \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} distinguishing p' from q' for each q'. Thus \langle α \rangle\textstyle\bigwedge_{q' \in \operatorname{Der}(q, α)} φ'_{q'} ∈ \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} distinguishes p from q.

- Case \textstyle\bigwedge_{i \in I}φ_i distinguishes p from q. Therefore some φ_i already distinguishes p from q. By induction hypothesis, there must be φ'_i ∈ \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} distinguishing p from q.

- Case \neg φ distinguishes p from q. Thus φ distinguishes q from p. By induction hypothesis, there is φ' ∈ \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} distinguishing q from p. Accordingly, \neg φ' ∈ \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} distinguishes p from q.

The smaller bisimulation logic \smash{\mathcal{O}^{}_{\mathrm{\smash{\lfloor B \rfloor}}}} will turn out to be closely related to the game characterization of bisimilarity in Section 2.4.5.

2.4 Games

So far, we have only seen behavioral equivalences and modal formulas as mathematical objects and not cared about decision procedures. This section introduces game-theoretic characterizations as a way of easily providing decision procedures for equivalences and logics alike. Intuitively, the games can be understood as dialogs between a party that tries to defend a claim and a party that tries to attack it.

2.4.1 Reachability Games

We use Gale–Stewart-style reachability games (in the tradition of Gale & Stewart, 1953) where the defender wins all infinite plays.

Definition 2.17 (Reachability game) A reachability game \mathcal{G}= (G, G_{\mathrm{d}}, \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}) is played on a directed graph consisting of

- a set of game positions G, partitioned into

- defender positions G_{\mathrm{d}}⊆ G

- and attacker positions G_{\mathrm{a}}≔ G \mathbin{\backslash}G_{\mathrm{d}},

- and a set of game moves {\mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}} ⊆ G × G.47

locale Equivalence_Games.game

locale Equivalence_Games.gameDefinition 2.18 (Plays and wins) We call the paths {g_0}{g_1} … ∈ G^{\infty} with {g_{i} \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}g_{i+1}} plays of \mathcal{G} from g_0, where G^{\infty} stands for finite and infinite words over G. The defender wins infinite plays. If a finite play g_{0}\dots g_{n}\!\mathrel{\smash{{›/\!\!\!\!\frac{\scriptstyle{\;\enspace\;}}{\;}\!\!›}}} is stuck, the stuck player loses: The defender wins the play if g_{n} ∈ G_{\mathrm{a}}, and the attacker wins if g_{n}∈ G_{\mathrm{d}}.

Usually, games are nondeterministic, that is, players have choices how a play proceeds at their positions. The player choices are formalized by strategies:

Definition 2.19 (Strategies and winning strategies) An attacker strategy is a (partial) function mapping play fragments ending at attacker positions to next positions to move to, s_{\mathrm{a}}\colon G^*G_{\mathrm{a}}\to G, where g_{\mathrm{a}}\mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}s_{\mathrm{a}}(\rho g_{\mathrm{a}}) must hold for all \rho g_{\mathrm{a}} where s_{\mathrm{a}} is defined.

A play {g_0}{g_1} … ∈ G^{\infty} is consistent with an attacker strategy s_{\mathrm{a}} if, for all its prefixes g_0 … g_i ending in g_i \in G_{\mathrm{a}}, g_{i + 1} = s_{\mathrm{a}}(g_0 … g_i).

Defender strategies are defined analogously, s_{\mathrm{d}}\colon G^*G_{\mathrm{d}}\to G.

If s ensures a player to win, s is called a winning strategy for this player. The player with a winning strategy from g_{0} is said to win game \mathcal{G} from g_{0}.

Usually, we will focus on positional strategies, that is, strategies that only depend on the most recent position, which we will type s_{\mathrm{a}}\colon G_{\mathrm{a}}\to G (or s_{\mathrm{d}}\colon G_{\mathrm{d}}\to G, respectively).

We call the positions where a player has a winning strategy their winning region.

Definition 2.20 (Winning region) The set \mathsf{Win}_\mathrm{a}⊆ G of all positions g where the attacker wins in \mathcal{G} is called the attacker winning region of \mathcal{G}. The defender winning region \mathsf{Win}_\mathrm{d} is defined analogously.

Example 2.13 (A simple choice) Inspect the game in Figure 2.12, where round nodes represent defender positions and rectangular ones attacker positions. Its valid plays starting from (\mathsf{1}) are (\mathsf{1}), (\mathsf{1})[\mathsf{2a}], (\mathsf{1})[\mathsf{2b}], and (\mathsf{1})[\mathsf{2a}](\mathsf{3}). The defender can win from (\mathsf{1}) with a strategy moving to [\mathsf{2b}] where the attacker is stuck. Moving to [\mathsf{2a}] instead would get the defender stuck. So, the defender winning region is \mathsf{Win}_\mathrm{d}= \{(\mathsf{1}),[\mathsf{2b}]\} and the attacker wins \mathsf{Win}_\mathrm{a}= \{[\mathsf{2a}], (\mathsf{3})\}. In Figure 2.12, the winning regions are marked with blue for the defender and red for the attacker.

The games we consider are positionally determined. This means, for each possible initial position, exactly one of the two players has a positional winning strategy s.

Proposition 2.8 (Determinacy) Reachability games are positionally determined, that is, for any game, each game position has exactly one winner: G = \mathsf{Win}_\mathrm{a}\cup \mathsf{Win}_\mathrm{d} and \mathsf{Win}_\mathrm{a}\cap \mathsf{Win}_\mathrm{d}= \varnothing, and they can win using a positional strategy.48

48 This is just an instantiation of positional determinacy of parity games (Zielonka, 1998). Parity games extend reachability games by number-coloring of positions. The defender only wins the infinite plays where the least color that appears infinitely often is even. Reachability games are the subclass of parity games only colored by 0.

We care about reachability games because they are versatile in characterizing formal relations. Everyday inductive (and coinductive) definitions can easily be encoded as games as in the following example.

Example 2.14 (The \leq-Game) Consider the following recursive definition of a less-or-equal operator ≤ on natural numbers in some functional programming language. (Consider nat to be defined as recursive data type nat = 0 | Succ nat.)

( 0 ≤ m ) = True

(Succ n ≤ 0 ) = False

(Succ n ≤ Succ m) = (n ≤ m)We can think of this recursion as a game where the attacker tries to prove that some natural number on the left is bigger than the right by always decrementing the left number and challenging the defender to do the same for the right stack too. Whoever hits zero first, loses.

This means we distribute the roles such that the defender wins for output True and the attacker for output False. The two base cases need to be dead ends for one of the players.

Formally, the game \mathcal{G}_\mathsf{leq} consists of attacker positions [n,m] and defender positions (n,m) for all n,m \in \mathbb{N}, connected by chains of moves: \begin{array}{rcl} [n + 1, m] & \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{leq} & (n, m)\\ (n, m + 1) & \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{leq} & [n, m]. \end{array} An excerpt of the game graph below [3,1] and [1,2] is shown in Figure 2.13.

\mathcal{G}_\mathsf{leq} now characterizes \leq on \mathbb{N} in the sense that: The defender wins \mathcal{G}_\mathsf{leq} from [n, m] precisely if n \leq m. (Proof: Induct over n with arbitrary m.)

The game is boring because the players do not ever have any choices. They just count down their way through the natural numbers until they hit [0, m - n] if n \leq m, or (n - m - 1, 0) otherwise.

\mathcal{G}_\mathsf{leq} is quite archetypical for the preorder and equivalence games that we use in the following pages. But do not worry, the following games will demand the players to make choices.

2.4.2 The Semantic Game of HML

As first bigger example of how recursive definitions can be turned into games, let us quickly look at a standard way of characterizing the semantics of HML (Definition 2.12) through a game. The defender wins precisely if the game starts from a state and formula such that the state satisfies the formula.

Definition 2.21 (HML game) For a transition system \mathcal{S}= (\mathcal{P},\mathit{Act},\xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{}$}}}), the HML game {\mathcal{G}}_\mathsf{HML}^{\mathcal{S}} = (G_\mathsf{HML},G_{\mathrm{d}},\mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{HML}) is played on G_\mathsf{HML}= \mathcal{P}× \mathsf{HML}, where the defender controls observations and negated conjunctions, that is (p, \langle α \rangle φ) ∈ G_{\mathrm{d}} and (p,\neg\textstyle\bigwedge_{i \in I}φ_i) ∈ G_{\mathrm{d}} (for all φ,p,I), and the attacker controls the rest.

- The defender can perform the moves: \begin{array}{rclr} (p, \langle α \rangle φ) & \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{HML} & (p', φ) & \text{ if $p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p' \quad$ and} \\ (p, \neg{\textstyle\bigwedge_{i \in I}φ_i}) & \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{HML} & (p, \neg φ_i) & \text{ with $i ∈ I$;} \end{array}

- and the attacker can move: \begin{array}{rclr} (p, \neg\langle α \rangle φ) & \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{HML} & (p', \neg φ) & \text{ if $p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p' \quad$ and} \\ (p, \textstyle\bigwedge_{i \in I}φ_i) & \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{HML} & (p, φ_i) & \text{ with $i ∈ I \quad$ and} \\ (p, \neg\neg φ) & \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathsf{HML} & (p, φ). & \end{array}

The intuition is that disjunctive constructs (\langle \cdot \rangle\cdots, \neg\textstyle\bigwedge \cdots) make it easier for a formula to be true and thus are controlled by the defender who may choose which of the ways to show truth is most convenient. At conjunctive constructs (\neg\langle \cdot \rangle\cdots, \textstyle\bigwedge \cdots) the attacker chooses the option that is the easiest to disprove. The most trivial case for the attacker to lose is (p, \top).

Example 2.15 The game of Example 2.13 is exactly the HML game {\mathcal{G}}_\mathsf{HML}^{\mathcal{S}_\mathsf{PQ}} for formula \langle τ \rangle\neg\langle \mathsf{a} \rangle\top and state \mathsf{P} of Example 2.12 with (\mathsf{1}) ≔ (\mathsf{P}, \langle τ \rangle\neg\langle \mathsf{a} \rangle\top), [\mathsf{2a}] ≔ (\mathsf{p_a}, \neg\langle \mathsf{a} \rangle\top), [\mathsf{2b}] ≔ (\mathsf{p_b}, \neg\langle \mathsf{a} \rangle\top), and (\mathsf{3}) ≔ (\mathsf{p_1}, \neg\top).

The defender winning region \mathsf{Win}_\mathrm{d}= \{(\mathsf{P}, \langle τ \rangle\neg\langle \mathsf{a} \rangle\top), (\mathsf{p_b}, \neg\langle \mathsf{a} \rangle\top)\} corresponds to the facts that \mathsf{P} \in \llbracket \langle τ \rangle\neg\langle \mathsf{a} \rangle\top \rrbracket and \mathsf{p_b} \in \llbracket \neg\langle \mathsf{a} \rangle\top \rrbracket.

As the technicalities are tangential to this thesis, we state the characterization result without proof:49

49 A detailed presentation of a more general HML game, also extending to recursive HML, can be found in Wortmann et al. (2015, Chapter 3).

Lemma 2.8 The defender wins {\mathcal{G}}_\mathsf{HML}^{\mathcal{S}} from (p, φ) precisely if p ∈ \llbracket φ \rrbracket.

2.4.3 The Bisimulation Game

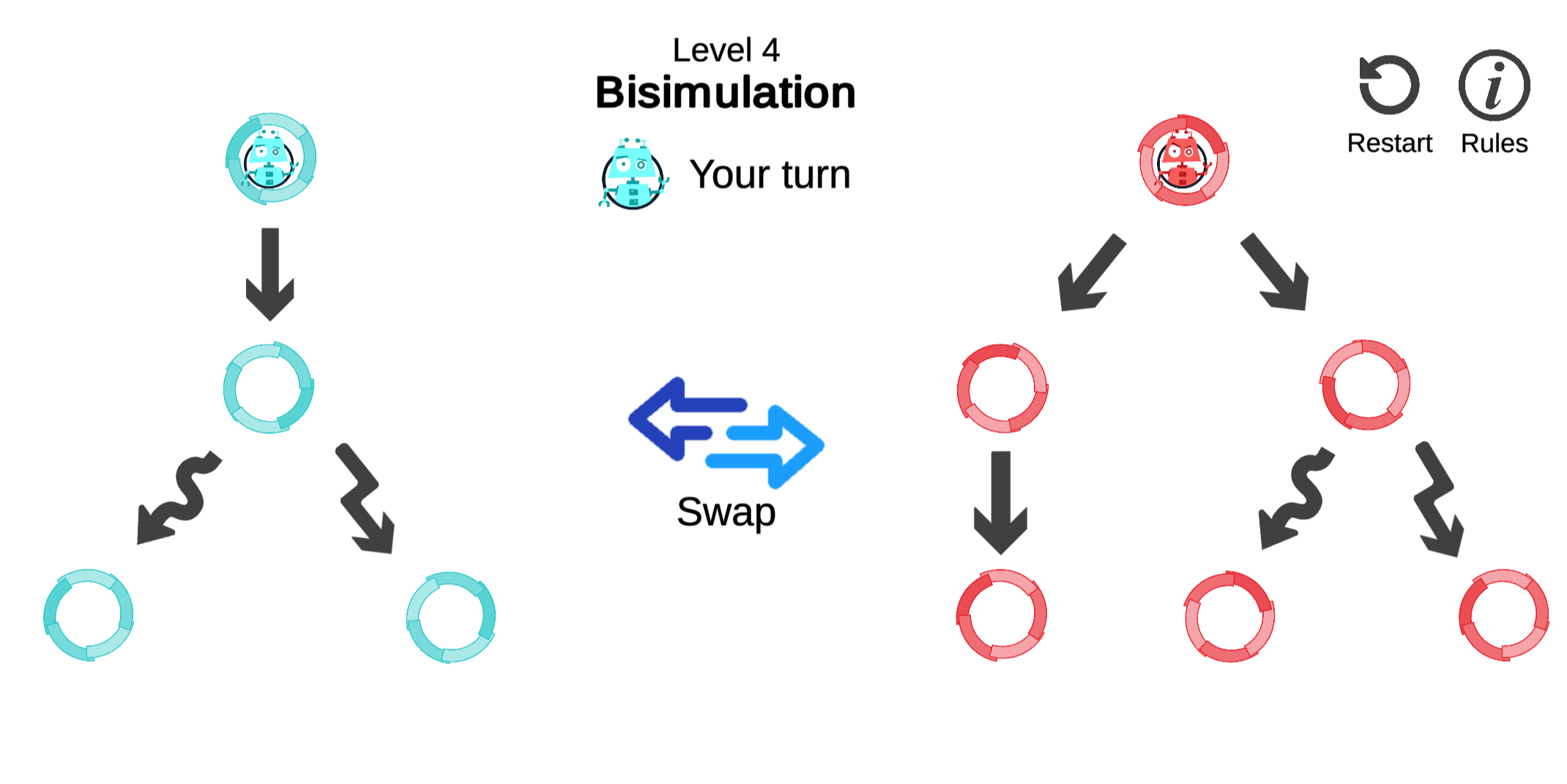

We now can add the bottom layer of Figure 2.1: That bisimilarity can be characterized through a game, where the defender wins if the game starts on a pair of bisimilar states. This approach has been popularized by Stirling (1996).

Definition 2.22 (Bisimulation game) For a transition system \mathcal{S}, the bisimulation game \mathcal{G}_{\mathrm{B}}^{\mathcal{S}} is played on attack positions G_{\mathrm{a}}≔ \mathcal{P}× \mathcal{P} and defense positions G_{\mathrm{d}}≔ \mathit{Act}× \mathcal{P}× \mathcal{P} with the following moves:50

locale Equivalence_Games.bisim_game

locale Equivalence_Games.bisim_game- The attacker may challenge simulation [p, q] \quad \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} \quad (α, p', q) \quad \text{if} \quad p \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} p',

- or the attacker may swap sides [p, q] \quad \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} \quad [q, p], \vphantom{p'}

- and the defender answers simulation challenges (α, p', q) \quad \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} \quad [p', q'] \quad \text{if} \quad q \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} q'.

A schematic depiction of the game rules can be seen in Figure 2.14. From dashed nodes, the game proceeds analogously to the initial attacker position.

Example 2.16 Consider \mathsf{p_{even}} \sim_\mathrm{B} \mathsf{p_{odd}} of Example 2.8. The bisimulation game on this system is given by Figure 2.15:

Clearly, there is no way for the attacker to get the defender stuck. Whatever strategy the attacker chooses, the game will go on forever, leading to a win for the defender. That it is always safe for the defender to answer with moves to [\mathsf{p_{even}}, \mathsf{p_{odd}}] and [\mathsf{p_{odd}}, \mathsf{p_{even}}] corresponds to \mathcal{R} ≔ \{(\mathsf{p_{even}}, \mathsf{p_{odd}}), (\mathsf{p_{odd}}, \mathsf{p_{even}})\} being a bisimulation.

Example 2.17 (A game of trolls) Let us recall Example 2.7 of the “trolled philosophers,” where we determined \mathsf{Q} and \mathsf{T} to be non-bisimilar. The bisimulation game graph for the system is depicted in Figure 2.16, where we use the minimized version of \mathsf{T} from Example 2.11 to fit the game graph onto the page. (This means, technically, read \mathsf{T} to denote \mathsf{T^m}.)

The attacker can win by moving [\mathsf{Q}, \mathsf{T}] \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} [\mathsf{T}, \mathsf{Q}] \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} (\tau, \includegraphics[height=1em, alt=q3]{img/trollface.png}, \mathsf{Q}) \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} [\includegraphics[height=1em, alt=q3]{img/trollface.png}, \mathsf{q_{ab}}] \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} [\mathsf{q_{ab}}, \includegraphics[height=1em, alt=q3]{img/trollface.png}] \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}_\mathrm{B} (\mathsf{a}, \mathsf{q_1}, \includegraphics[height=1em, alt=q3]{img/trollface.png}) \mathrel{\smash{{›/\!\!\!\!\frac{\scriptstyle{\;\enspace\;}}{\;}\!\!›}}}_\mathrm{B}. Along this sequence of positions, the defender never has a choice and is stuck in the end. The attacker exploits, that \mathsf{T} can reach an early deadlock via \mathsf{T} \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{\tau}$}}} \includegraphics[height=1em, alt=q3]{img/trollface.png}.

Theorem 2.2 (Stirling’s game characterization) The defender wins the bisimulation game \mathcal{G}_{\mathrm{B}}^{\mathcal{S}} starting at attacker position [p, q] precisely if p \sim_\mathrm{B} q.51

Proof. Sketch for both directions:

- If \mathcal{R} is a symmetric simulation with (p_0,q_0) ∈ \mathcal{R}, then the following positional defender strategy is winning from [p_0, q_0]:52 s((α, p', q)) ≔ [p', \operatorname{choose} q'\ldotp (p', q') ∈ \mathcal{R} \land q \xrightarrow{\smash{\raisebox{-2pt}{$\scriptstyle{α}$}}} q'].

- If there is a positional defender strategy s winning from [p_0, q_0], then the following relation \mathcal{R}_s with (p_0,q_0) ∈ \mathcal{R}_s is a symmetric simulation:53 \mathcal{R}_s ≔ \{(p,q) \mid \text{there is a play $(p_0, q_0),\dots,(p,q)$ following strategy $s$}\}.

Remark 2.5 (Playing equivalence games). One of the big advantages of game characterizations is that they provide a way to discuss equivalence and inequivalence interactively among humans. An example of such a situation can be seen in Figure 2.17.

Photo: Hanna Wesner

If you want to try it at home, there also are several computer game implementations of bisimulation games.

For instance, Peacock (2020) implements a game about simulation and bisimulation as well as several weaker notions. The human plays the attacker trying to point out the inequivalence of systems according to the rules of Definition 2.22. Figure 2.18 shows a screenshot of said game. It can be played on https://games.equiv.io/play/rvg-game/.

Remark 2.6 (Number comparison as simulation game). If one plays the bisimulation game of Definition 2.22 without the swapping moves, it will characterize the simulation preorder.

Consider the family of processes \mathsf{N}_n with n \in \mathbb{N}. Define \mathsf{N}_0 ≔ \mathbf{0} and \mathsf{N}_{n + 1} ≔ \mathsf{one}\ldotp\! \mathsf{N}_n. Then, the simulation game played from [\mathsf{N}_{n}, \mathsf{N}_{m}] is isomorphic to the \leq-game \mathcal{G}_{\mathsf{leq}} from Example 2.14. Therefore, the defender wins [\mathsf{N}_{n}, \mathsf{N}_{m}] in the simulation game precisely if they win [n, m] in the \leq-game. We can compare numbers n \leq m by comparing programs \mathsf{N}_{n} \preceq_\mathrm{S} \mathsf{N}_{m}!

In this sense, comparisons of programs and of numbers are … comparable.

2.4.4 Deciding Reachability Games

All we need to turn a game characterization into a decision procedure is a way to decide which player wins a position. With this, {\mathcal{G}}_\mathsf{HML} of Definition 2.21 entails a model checking procedure for HML and {\mathcal{G}}_{\mathrm{B}} a bisimulation checking algorithm on finite-state systems.

Algorithm 2.1 describes how to compute who wins a finite reachability game for each position in time linear to the size of the game graph \mathit{O}(\smash{|{\mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}}|}).

\begin{array}{rl} {\scriptstyle 1} & \operatorname{\textbf{\textsf{def}}}\; \mathtt{compute\_winning\_region}(\mathcal{G}=(G,G_{\mathrm{d}},\mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}})) \colon \\ {\scriptstyle 2} & \quad \mathtt{defender\_options} ≔ [g \mapsto n \mid g \in G_{\mathrm{d}}\land n = \smash{|{\{g' \mid g \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}g'\}}|}] \\ {\scriptstyle 3} & \quad \mathtt{attacker\_win} ≔ \varnothing \\ {\scriptstyle 4} & \quad \mathtt{todo} ≔ \{g\in G_{\mathrm{d}}\mid \mathtt{defender\_options}[g]=0\} \\ {\scriptstyle 5} & \quad \operatorname{\textbf{\textsf{while}}}\; \mathtt{todo} \neq \varnothing \colon \\ {\scriptstyle 6} & \quad \quad \mathtt{g} ≔ \operatorname{\textbf{\textsf{some}}}\; \mathtt{todo} \\ {\scriptstyle 7} & \quad \quad \mathtt{todo} ≔ \mathtt{todo} \mathbin{\backslash}\{\mathtt{g}\} \\ {\scriptstyle 8} & \quad \quad \operatorname{\textbf{\textsf{if}}}\; \mathtt{g} \notin \mathtt{attacker\_win} \colon \\ % note: the if should not be necessary for sets. {\scriptstyle 9} & \quad \quad \quad \mathtt{attacker\_win} ≔ \mathtt{attacker\_win} \cup \{\mathtt{g}\} \\ {\scriptstyle 10} & \quad \quad \quad \operatorname{\textbf{\textsf{for}}}\; \mathtt{g_{p}} \in (\cdot \mathrel{\smash{›\!\!\frac{\scriptstyle{\;\enspace\;}}{}\!\!›}}\mathtt{g}) \colon \\ {\scriptstyle 11} & \quad \quad \quad \quad \operatorname{\textbf{\textsf{if}}}\; \mathtt{g_{p}} \in G_{\mathrm{a}}\colon \\ {\scriptstyle 12} & \quad \quad \quad \quad \quad \mathtt{todo} ≔ \mathtt{todo} \cup \{\mathtt{g_{p}}\} \\ {\scriptstyle 13} & \quad \quad \quad \quad \operatorname{\textbf{\textsf{else}}}\; \colon \\ {\scriptstyle 14} & \quad \quad \quad \quad \quad \mathtt{defender\_options}[\mathtt{g_{p}}]≔\mathtt{defender\_options}[\mathtt{g_{p}}]-1 \\ {\scriptstyle 15} & \quad \quad \quad \quad \quad \operatorname{\textbf{\textsf{if}}}\; \mathtt{defender\_options}[\mathtt{g_{p}}]=0 \colon \\ {\scriptstyle 16} & \quad \quad \quad \quad \quad \quad \mathtt{todo} ≔ \mathtt{todo} \cup \{\mathtt{g_{p}}\} \\ {\scriptstyle 17} & \quad \mathsf{Win}_\mathrm{a}≔ \mathtt{attacker\_win} \\ {\scriptstyle 18} & \quad \operatorname{\textbf{\textsf{return}}}\; \mathsf{Win}_\mathrm{a} \end{array}

Intuitively, \mathtt{compute\_winning\_region} first assumes that the defender were to win everywhere and that each outgoing move of every position might be a winning option for the defender. Over time, every position that is determined to be lost by the defender is added to a \mathtt{todo} list.54

At first, the defender loses immediately exactly at the defender’s dead ends. Each defender-lost position is added to the \mathtt{attacker\_win} set. To trigger the recursion, each predecessor is noted as defender-lost, if it is controlled by the attacker, or the amount of outgoing defender options is decremented if the predecessor is defender-controlled. If the count of a predecessor position hits 0, the defender also loses from there.

Once we run out of \mathtt{todo} positions, we know that the attacker has winning strategies exactly for each position we have visited.

The following Table 2.1 lists how Algorithm 2.1 computes the winning region \mathsf{Win}_\mathrm{a}= \{[\mathsf{2a}], (\mathsf{3})\} of the simple choice game of Example 2.13.

| \mathtt{g} | \mathtt{defender\_options} | \mathtt{todo} |

|---|---|---|

| - | (\mathsf{1}) \mapsto 2, (\mathsf{3}) \mapsto 0 | (\mathsf{3}) |

| (\mathsf{3}) | (\mathsf{1}) \mapsto 2, (\mathsf{3}) \mapsto 0 | [\mathsf{2a}] |

| [\mathsf{2a}] | (\mathsf{1}) \mapsto 1, (\mathsf{3}) \mapsto 0 | \varnothing |